the Creative Commons Attribution 4.0 License.

Opinion: Why all emergent constraints are wrong but some are useful – a machine learning perspective

Duncan Watson-Parris

Global climate change projections are subject to substantial modelling uncertainties. A variety of emergent constraints, as well as several other statistical model evaluation approaches, have been suggested to address these uncertainties. However, they remain heavily debated in the climate science community. Still, the central idea to relate future model projections to already observable quantities has no real substitute. Here, we highlight the validation perspective of predictive skill in the machine learning community as a promising alternative viewpoint. Specifically, we argue for quantitative approaches in which each suggested constraining relationship can be evaluated comprehensively based on out-of-sample test data – on top of qualitative physical plausibility arguments that are already commonplace in the justification of new emergent constraints. Building on this perspective, we review machine learning ideas for new types of controlling-factor analyses (CFAs). The principal idea behind these CFAs is to use machine learning to find climate-invariant relationships in historical data which hold approximately under strong climate change scenarios. On the basis of existing data archives, these climate-invariant relationships can be validated in perfect-climate-model frameworks. From a machine learning perspective, we argue that such approaches are promising for three reasons: (a) they can be objectively validated for both past data and future data, (b) they provide more direct – and, by design, physically plausible – links between historical observations and potential future climates, and (c) they can take high-dimensional and complex relationships into account in the functions learned to constrain the future response. 
We demonstrate these advantages for two recently published CFA examples in the form of constraints on climate feedback mechanisms (clouds, stratospheric water vapour) and discuss further challenges and opportunities using the example of a rapid adjustment mechanism (aerosol–cloud interactions). We highlight several avenues for future work, including strategies to address non-linearity, to tackle blind spots in climate model ensembles, to integrate helpful physical priors into Bayesian methods, to leverage physics-informed machine learning, and to enhance robustness through causal discovery and inference.

1 Introduction

Machine learning applications are now ubiquitous in the atmospheric sciences (e.g. Huntingford et al., 2019; Reichstein et al., 2019; Thomas et al., 2021; Hess et al., 2022; Hickman et al., 2023). However, there is not a single recipe for machine learning to advance the field. Prominently, there is an important distinction between machine learning for weather forecasting (Dueben and Bauer, 2018; Rasp and Thuerey, 2021; Bi et al., 2023; Lam et al., 2023; Kurth et al., 2023; Bouallègue et al., 2024) and machine learning for climate modelling (Watson-Parris, 2021). In weather forecasting, the aim is to predict a relatively short time horizon over which any new influences of climate change are typically negligible. In stark contrast, the science of climate change is interested in how changing boundary conditions – i.e. anthropogenic changes in climate forcings such as carbon dioxide (CO2) or aerosols – will affect Earth's climate system on long timescales. The need to go beyond what has previously been observed poses specific, hard challenges to the application of machine learning in climate science. This is the classic distinction, often summarized as “ML models are good at interpolation (weather forecasting) but not at extrapolation (climate change response)”. As a result, machine learning in climate science has also largely focused on interpolation sub-tasks such as climate model emulation to speed up additional scenario projections (Mansfield et al., 2020; Watson-Parris et al., 2022; Kaltenborn et al., 2023; Watt-Meyer et al., 2023) or faster and better machine learning parameterizations for climate models (Nowack et al., 2018a, 2019; Rasp et al., 2018; Beucler et al., 2020). In this opinion article, we highlight a few ideas regarding how machine learning can, nonetheless, help reduce the substantial modelling uncertainties in climate change projections, addressing a major scientific challenge of this century. Specifically, we will focus on the example of observational constraint frameworks (Ceppi and Nowack, 2021; Nowack et al., 2023).

In the remaining sections of the Introduction, we first briefly review the concept of model uncertainty, as well as current observational constraint methods, including some of their limitations. In Sect. 2, we discuss controlling-factor analyses (CFAs) using linear machine learning methods as an alternative approach to observational constraints. We highlight several advantages, exemplified for the cases of constraints on global cloud feedback and stratospheric water vapour feedback. In Sect. 3, we discuss key challenges in constraining future responses on the basis of present-day data, particularly non-linearity and confounding. We illustrate these using the example of constraining the effective radiative forcing (ERF) from aerosol–cloud interactions. In Sect. 4, we highlight potential avenues for future work and for addressing model uncertainty with machine learning frameworks more generally. In Sect. 5, we summarize key ideas for observational constraints and suggest that machine learning ideas could also help to improve climate model tuning frameworks in the future.

1.1 Model uncertainty

Three sources of climate model projection uncertainty are commonly distinguished (Hawkins and Sutton, 2009; Deser et al., 2012; O'Neill et al., 2014):

- scenario uncertainty, given different anthropogenic emission scenarios of greenhouse gases and aerosols (typical scenarios range from strong mitigation of climate change to unmitigated growth of emissions);

- internal variability uncertainty due to noise from climate variability superimposed onto any scenario-driven trends (for example, any given year might be colder or warmer than the climate-dependent expected average value for temperature);

- model uncertainty arising from varying scientific design choices for climate models developed by different institutions (for example, climate models can differ in terms of which and how specific processes are represented, including parameterizations of cloud processes, aerosols, and convection (Carslaw et al., 2013a, b; Sherwood et al., 2014, 2020; Kasoar et al., 2016; Bellouin et al., 2020), or in their representations of the carbon cycle and atmospheric chemistry (Cox, 2019; Nowack et al., 2017, 2018a)) – ultimately, the resulting model uncertainty describes the long-term projection uncertainty in, for example, regional surface temperature or precipitation changes under the same emissions scenario.

Despite decades-long model development efforts, model uncertainty in key climate impact variables such as temperature and precipitation, globally and regionally, has remained stubbornly high (Sherwood et al., 2020; IPCC, 2021). The apparent lack of net progress might be the result of the competition between (a) improved individual process representations in climate models and (b) the continuously growing number of (uncertain) climate processes being considered in the first place (Cox, 2019; Eyring et al., 2019; Saltelli, 2019). Whatever the reason may be, empirically, we probably need to accept large inter-model spread in climate change projections for the foreseeable future.

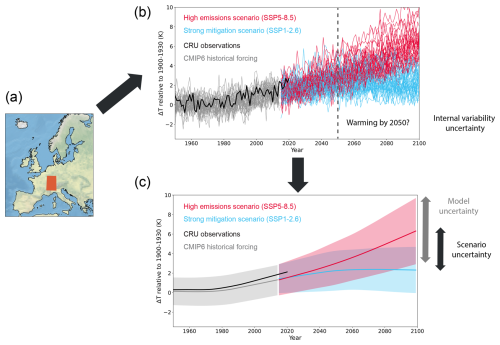

In Fig. 1, we illustrate the three uncertainty contributions for temperature projections for an area in central Europe. Scenario and model uncertainties clearly start to dominate over time, whereas at the beginning (around the years 2014–2030), internal-variability uncertainty renders even very different forcing scenarios difficult to distinguish. In climate science, scenario and internal-variability uncertainties are often taken as a given. To characterize scenario uncertainty, it is common to consider a range of socioeconomic development pathways, from strong-mitigation scenarios with a target of, for example, less than 2 °C global warming to high-forcing business-as-usual scenarios (O'Neill et al., 2016). Internal-variability uncertainty, in turn, is usually characterized by considering multiple ensemble members for the same climate model and forcing scenario (Sippel et al., 2015; O'Reilly et al., 2020; Labe and Barnes, 2021; Wills et al., 2022). In this paper, we focus on methods that tackle model uncertainty.

Clearly, in order to make meaningful climate risk assessments, society and policymakers require better (more certain) information than raw model ensembles are currently able to provide (Fig. 1). Here, we will suggest a machine learning perspective on this challenging yet important task, contrasting and comparing our view to other concepts for observationally constraining model uncertainty (e.g. Knutti, 2010; Eyring et al., 2019; Hall et al., 2019; Williamson et al., 2021). Our viewpoint still shares the fundamental idea that, from the complexity of many small- and large-scale processes involved in the climate system, relatively simple relationships may emerge over time and space. These simple relationships may then be used to robustly compare climate model behaviour to observed relationships so as to distinguish more realistic models from the rest (Allen and Ingram, 2002; Held, 2014; Huntingford et al., 2023) without having to constrain each microphysical and macrophysical process individually.

Figure 1. Surface air temperature climate model projections and observations for a 5° × 5° grid box in central Europe. The region is indicated in orange in (a). The raw projections, relative to their 1900–1930 average, are shown for 34 Coupled Model Intercomparison Project phase 6 (CMIP6) models in (b). Grey lines show one ensemble member of each model for simulations under historical forcing conditions. The same ensemble members and CMIP6 models are shown for the years 2014 to 2100 under a high-emission (red) and a strong-mitigation scenario (blue). SSP stands for Shared Socioeconomic Pathway. Observational data according to the Climatic Research Unit (CRU, version TS4.05, Harris et al., 2020) are shown in solid black. In (b), internal-variability uncertainty across the 34 simulations makes it difficult to, for example, answer the question of how much the region is projected to have warmed by the year 2050, even in the absence of model uncertainty. This uncertainty could be smoothed out by considering the average over multiple ensemble members for each model (not done here). Instead, we applied Lowess smoothing to approximately remove internal variability and indicate the remaining ±2σ intervals for each scenario in (c). This, in turn, highlights more clearly the scenario uncertainty, best exemplified by the differences in the multi-model means provided as the central solid lines in (c). Finally, the model uncertainty – i.e. the spread in projections for a given scenario after removing internal-variability uncertainty – makes an evidently large contribution to the projections here. For example, for the high-emission scenario, model responses range between approximately 3 and 10 K of warming by 2100.
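The separation of the three uncertainty contributions described in the caption can be sketched in a few lines of NumPy. This is only an illustrative toy under stated assumptions: the ensemble is synthetic (random linear warming rates plus white noise), the warming rates and noise level are invented, and a low-order polynomial fit stands in for the Lowess smoothing used for the figure.

```python
import numpy as np
from numpy.polynomial import polynomial as P

rng = np.random.default_rng(0)
years = np.arange(2015, 2101)
n_models = 34

def synthetic_ensemble(mean_rate):
    """Toy projections: each model has its own warming rate (K/yr) plus noise."""
    rates = rng.normal(mean_rate, 0.25 * mean_rate, size=n_models)
    forced = rates[:, None] * (years - years[0])[None, :]
    noise = rng.normal(0.0, 0.6, size=forced.shape)   # internal variability
    return forced + noise

def smooth(ensemble, degree=3):
    """Per-model polynomial fit (assumed stand-in for Lowess smoothing)."""
    t = (years - years.mean()) / (years[-1] - years[0])  # rescaled time axis
    coeffs = P.polyfit(t, ensemble.T, degree)            # (degree + 1, n_models)
    return P.polyval(t, coeffs)                          # (n_models, n_years)

high = synthetic_ensemble(0.06)   # hypothetical high-emission scenario
low = synthetic_ensemble(0.02)    # hypothetical strong-mitigation scenario
high_s, low_s = smooth(high), smooth(low)

# Internal variability: residual around each model's smoothed trajectory
internal_sd = np.std(high - high_s, axis=1).mean()
# Model uncertainty: spread of smoothed projections in 2100 within one scenario
model_sd_2100 = np.std(high_s[:, -1])
# Scenario uncertainty: difference between multi-model means in 2100
scenario_gap_2100 = high_s[:, -1].mean() - low_s[:, -1].mean()

print(f"internal variability (1 sigma): {internal_sd:.2f} K")
print(f"model spread in 2100 (1 sigma): {model_sd_2100:.2f} K")
print(f"scenario difference in 2100:    {scenario_gap_2100:.2f} K")
```

In this toy set-up, as in Fig. 1, the noise term dominates early in the projection period, whereas the model and scenario terms grow with time.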

1.2 Methods to address model uncertainty

As mentioned above, international climate model development efforts have not resulted in reduced model uncertainty over time (e.g. Zelinka et al., 2020). To address this longstanding issue, a variety of approaches have been suggested to evaluate climate models and to weight their projections, particularly through systematic comparisons of the modelled climate statistics and relationships against those found in Earth observations. Current methods can be broadly separated into two major groups: (a) statistical climate model evaluation approaches and (b) emergent constraints.

1.2.1 Statistical model evaluation frameworks

There are several widely used frameworks that use a defined set of standard statistical measures to compare model behaviour to observations. Model projections are, for example, weighted by performance measures relating to historical trends and variability in key variables such as temperature or precipitation (e.g. Giorgi and Mearns, 2002; Tebaldi et al., 2004; Reichler and Kim, 2008; Räisänen et al., 2010; Lorenz et al., 2018; Brunner et al., 2020a, b; Tokarska et al., 2020; Hegerl et al., 2021; Ribes et al., 2021, 2022; Douville et al., 2022; Qasmi and Ribes, 2022; Douville, 2023; O'Reilly et al., 2024), and similar approaches have been suggested in atmospheric chemistry (Karpechko et al., 2013). In addition, methods to account for model interdependencies (due to shared model development backgrounds or components) in these weighting procedures have been proposed (Bishop and Abramowitz, 2013; Abramowitz and Bishop, 2015; Sanderson et al., 2015, 2017; Knutti et al., 2017; Abramowitz et al., 2019).
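To make the idea of performance weighting concrete, the following sketch weights a synthetic ensemble by a single historical error score. The Gaussian-shaped weight and the shape parameter `sigma_d` are assumptions chosen for illustration; the frameworks cited above differ in their exact formulations and, in several cases, also include independence terms.

```python
import numpy as np

rng = np.random.default_rng(6)
n_models = 25

# Hypothetical inputs: distance of each model to observations (historical
# performance score) and each model's projected future warming in K.
hist_error = np.abs(rng.normal(0.0, 0.5, size=n_models))
projection = rng.normal(4.0, 1.0, size=n_models)

# Assumed weighting form: models far from observations are down-weighted.
sigma_d = 0.4
weights = np.exp(-(hist_error / sigma_d) ** 2)
weights /= weights.sum()

weighted_mean = np.sum(weights * projection)
unweighted_mean = projection.mean()
effective_n = 1.0 / np.sum(weights ** 2)   # number of models that effectively contribute

print(f"unweighted mean: {unweighted_mean:.2f} K")
print(f"weighted mean:   {weighted_mean:.2f} K (effective n = {effective_n:.1f})")
```

Note that nothing in this calculation checks whether a small historical error actually implies a more reliable future response; the weights only reflect past agreement with observations.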

A disadvantage of many conventional model evaluation approaches is that the past statistical measures used to compare models to observations (e.g. standard deviations or climatological means and trends) are not necessarily good indicators of whether one can rely more on a specific model's future response. Indeed, a model that performs worse on certain past performance measures might actually be more informative about the true future response. Simple historical performance scores can be blind to offsetting model biases (Nowack et al., 2020) and could even be targeted by model tuning (Mauritsen et al., 2012; Hourdin et al., 2017), for example, to better match historical temperature trends. From a machine learning perspective, this could lead to situations akin to overfitting training data (apparent skill based on historical data used to tune climate models). The same model might – as a result – actually be less informative or predictive in new situations (in this case, under climate change).

Overall, due to the indirect link between historical performance measures and future responses in conventional model evaluation frameworks, it is not clear a priori which of the evaluation methods to trust most. This point was demonstrated in the review by Hegerl et al. (2021). Basically, different weighting approaches provide different constraints (in terms of both median and uncertainty ranges), and it remains difficult to establish which approach to trust most and to find ways to make them directly comparable. Another practical limitation is that standard methods used to constrain climate change projections are typically based on relatively large-scale spatial and long-term temporal averaging to find significant correlations between historical climate model skill and future projections. Constraining climate change projections of extreme events is consequently even more challenging (Sippel et al., 2017; Lorenz et al., 2018).

1.2.2 Emergent constraints

“The emergent constraint approach uses the climate model ensemble to identify a relationship between an uncertain aspect of the future climate and an observable variation or trend in the contemporary climate” (Williamson et al., 2021). Compared to statistical model evaluation criteria, emergent constraints more directly target relationships between shorter-term variability within the Earth system (“observables”, e.g. seasonal-cycle characteristics, observed trends, and other aspects of internal and interannual variability) and future climate change, even under strong and century-long climate forcing scenarios (see also review papers by Hall et al., 2019; Eyring et al., 2019).

Among the prominent examples are proposed constraints on changes in snow albedo (Hall and Qu, 2006), the highly uncertain cloud feedback and equilibrium climate sensitivity (Sherwood et al., 2014; Klein and Hall, 2015; Tian, 2015; Brient and Schneider, 2016; Lipat et al., 2017; Cox et al., 2018; Dessler and Forster, 2018), climate-driven changes in the hydrological cycle (O'Gorman, 2012; Deangelis et al., 2015; Li et al., 2017; Chen et al., 2022; Shiogama et al., 2022; Thackeray et al., 2022) and in the carbon cycle (Cox et al., 2013; Wenzel et al., 2014; Cox, 2019; Winkler et al., 2019a, b), wintertime Arctic amplification (Bracegirdle and Stephenson, 2013; Thackeray and Hall, 2019), marine primary production (Kwiatkowski et al., 2017), permafrost (Chadburn et al., 2017), atmospheric circulation (Wenzel et al., 2016), and mid-latitude daily heat extremes (Donat et al., 2018).

A central hypothesis of emergent constraint definitions is that a measure of historical, already observable climate can consistently be linked to future responses. A classic example is the correlation between the contemporary seasonal-cycle amplitude of snow albedo and the long-term snow albedo climate feedback under climate change (Hall and Qu, 2006). Of course, the latter is only available from climate model simulations (i.e. it is “unobserved”) so that the correlation between the past and future quantity can only quite literally “emerge” across large climate model ensembles of historical and future scenario simulations. In comparison, CFAs described later will also use climate model ensembles to validate a climate-invariance property on which they are based. However, they do not rest on assumptions as strong as those of emergent constraints, and their predictive skill can be evaluated on both historical data (observations and climate model simulations) and realizations of future data (model simulations).
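The basic mechanics of an emergent constraint can be illustrated with a simple across-model regression. The sketch below uses entirely synthetic numbers: the ensemble values, the "observed" value `x_obs`, and its uncertainty `x_obs_sd` are hypothetical, and the error propagation is a deliberately simple choice rather than the procedure of any specific published constraint.

```python
import numpy as np

rng = np.random.default_rng(1)
n_models = 30

# Hypothetical ensemble: x is a present-day observable, y the future response.
# Each model contributes exactly one (x, y) pair.
x = rng.normal(1.0, 0.3, size=n_models)
y = 2.0 * x + rng.normal(0.0, 0.2, size=n_models)

# The constraint "emerges" as a regression across the ensemble
slope, intercept = np.polyfit(x, y, 1)
r = np.corrcoef(x, y)[0, 1]
resid_sd = np.std(y - (slope * x + intercept), ddof=2)

# Hypothetical observation of x with its measurement uncertainty
x_obs, x_obs_sd = 0.9, 0.05
y_constrained = slope * x_obs + intercept
y_constrained_sd = np.sqrt((slope * x_obs_sd) ** 2 + resid_sd ** 2)

print(f"unconstrained: {y.mean():.2f} +/- {y.std(ddof=1):.2f}")
print(f"constrained:   {y_constrained:.2f} +/- {y_constrained_sd:.2f} (r = {r:.2f})")
```

The sample size of the regression equals the number of models, and the future response y enters the fit directly; both points matter for the comparison with CFAs.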

1.3 Limitations of current constraint frameworks

The challenge to constrain future projections on the basis of observations is a difficult one. Any attempt to establish robust relationships between the (observable) past and the (unobservable) simulated future will be hampered by the non-stationary nature of the climate system. Any information content that can be gained from observations will, naturally and intuitively, diminish as the climate changes. In addition, once relationships of this kind have been put forward, the various methods discussed in Sect. 1.2 typically lead to different suggested constraints for median climate change responses and confidence intervals (e.g. Brunner et al., 2020a; Hegerl et al., 2021). This raises the next central question: which of the methods should we trust (most)? This is by no means a small question, considering the significant possible impacts associated with future changes in climate.

We identify three broad issues which make progress on this central question particularly difficult and which we suggest can be addressed by incorporating machine learning ideas into observational constraint frameworks. Further limitations are discussed in Sect. IV of Williamson et al. (2021). The three we wish to highlight here are as follows:

1. The indirect nature of the link between the past performance measures and the future response to be constrained. While, nowadays, most emergent constraints are suggested together with a plausible theoretical link between the observable measure and the future response (Williamson et al., 2021), the connection is always indirect (Caldwell et al., 2018). In many cases, this might, indeed, lead to a scientifically robust relationship; however, this robustness is, in practice, difficult to evaluate objectively. Clearly, the situation is not very different in model evaluation methods which, for example, aim to correlate the historical model-consistent standard deviation in precipitation with its future response. The indirect nature of these links means that one can attempt to manipulate x (the “observed”) in models to better match the observational record. If this leads to the desired improvement in y (i.e. the simulated response) then that would be a targeted way to improve climate models. However, there is clearly no guarantee that apparent improvements in modelling historical x will translate into constrained future responses (Hall et al., 2019).

2. Low dimensionality equals oversimplification? The reliance on a few, relatively simple, historical performance measures could be argued to have played a key role in limiting progress to date, even if they have the advantage of being relatively easy to conceptualize. For example, it is hard to imagine that very simple measures can truly reflect the complexity of the climate system driving model uncertainty (Caldwell et al., 2018; Bretherton and Caldwell, 2020; Schlund et al., 2020; Nowack et al., 2020). A natural focus on the best-performing of the resulting constraints, even if linked to plausible physical mechanisms, will likely overfit the relationships between past model performance and projected change, which returns us to point 1. In addition, the constantly ongoing quest to find such relationships is somewhat akin to issues with multiple-hypothesis testing in statistics, which directly leads us to point 3.

3. Risk of data mining correlations. A key concern with regard to identifying relationships such as emergent constraints, which seek strong correlations between a past (uncertain) observable and future (uncertain) responses across climate model ensembles, lies in the inherent risk of correlations that arise (largely) by chance. These correlations inevitably appear in large data archives representing complex systems such as climate models, which encompass a vast array of climate variables. As a result, if scientists keep searching for such relationships long enough, they will eventually find a few. In turn, for a high-dimensional and highly coupled climate system, those relationships will likely be at least partly explainable on the basis of actual scientific mechanisms operating in the system, whereas other correlations will occur entirely by chance. A natural focus on the best-performing of the resulting constraints, even if linked to plausible physical mechanisms, will likely overfit the relationships between past model performance and projected change, often even falling victim to coincidental correlations. This “data mining” criticism has been prominently made in previous publications (e.g. Caldwell et al., 2014; Sanderson et al., 2021; Williamson et al., 2021; Breul et al., 2023).

Several emergent constraints were found to weaken or even vanish when moving from CMIP3 to CMIP5 or from CMIP5 to CMIP6 (Caldwell et al., 2018; Pendergrass, 2020; Schlund et al., 2020; Williamson et al., 2021; Simpson et al., 2021; Thackeray et al., 2021), suggesting that the previously identified relationships were, indeed, likely to be overconfident or coincidental.
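The data mining concern can be illustrated with a small numerical experiment. In the sketch below, all numbers are synthetic and, by construction, none of the candidate observables has any relationship with the future response; the ensemble size and the number of candidates screened are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(2)
n_models, n_candidates = 30, 1000

y = rng.normal(size=n_models)                    # future response, one value per model
X = rng.normal(size=(n_candidates, n_models))    # unrelated candidate observables

# Correlation of every candidate observable with y across the ensemble
Xc = X - X.mean(axis=1, keepdims=True)
yc = y - y.mean()
r = (Xc @ yc) / (np.linalg.norm(Xc, axis=1) * np.linalg.norm(yc))
best = np.argmax(np.abs(r))

# Re-evaluate on a fresh, independent ensemble (think: next model generation);
# for pure noise, the new values carry no memory of the old ones.
y_new = rng.normal(size=n_models)
x_new = rng.normal(size=n_models)
r_new = np.corrcoef(x_new, y_new)[0, 1]

print(f"best |r| among {n_candidates} unrelated candidates: {abs(r[best]):.2f}")
print(f"|r| for that candidate in a new ensemble:       {abs(r_new):.2f}")
```

With only around 30 models, screening enough candidates will typically return a seemingly strong correlation that has no reason to persist in a new ensemble, which is consistent with the weakening of several constraints between CMIP generations.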

We suggest machine learning-guided controlling-factor analysis (CFA) as a promising alternative to establish more robust relationships tested to hold across climate states and climate model ensembles. CFAs establish functions that are only trained on data representative of the observational record but which are subsequently also tested for future responses, as can be evaluated across ensembles of future climate model projections. These functions therefore establish a direct link between the past and the future. This climate invariance can be evaluated across sets of climate models or even sets of CMIP ensembles, addressing limitation (1). The use of machine learning allows us to learn higher-dimensional, less simplifying relationships, addressing limitation (2). Finally, the design of the CFA functions will be motivated by known physical relationships between target variables to be constrained (the predictand) and environmental controlling factors (the predictors) which – together with the comprehensive out-of-sample testing – addresses limitation (3). The fact that the resulting functions can be validated under both past and future conditions enables an objective validation and uncertainty quantification and reduces the risk of falling victim to coincidental correlations.

Low-dimensional CFA frameworks have been popular in climate science for some time, especially in the context of constraining uncertainty on cloud feedback mechanisms (e.g. Klein et al., 2017) but also for understanding stratospheric water vapour variability (Smalley et al., 2017). Here, we focus on recent machine learning ideas to improve their performance for specific constraints on climate feedback mechanisms. We often found that CFAs are at first interpreted as a type of emergent constraint. In the following, we instead highlight key differences between the two frameworks, arguing for a separate treatment. We will illustrate central aspects by reviewing two recently published examples of constraining highly uncertain changes in Earth's cloud cover (Ceppi and Nowack, 2021) and in stratospheric water vapour (Nowack et al., 2023).

2.1 Framework definition

The central idea behind CFA for observational constraints is the training of a function f relating multiple large-scale environmental variables X to a target variable y over time t:

$$ y(t) = f\big(X(t);\, \theta\big) $$

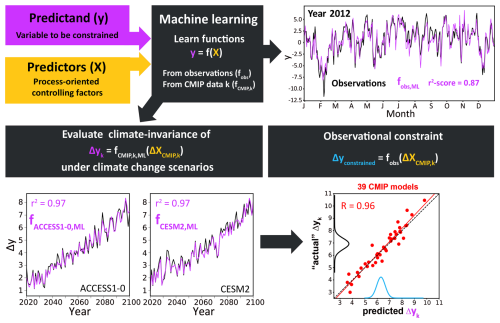

Figure 2. Example workflow for a CFA with machine learning. First, the regression set-up is defined so that the predictand y can be modelled well on the basis of a set of controlling factors X. These functions are learned individually for observational datasets and climate model simulations under historical climate forcing conditions. Out-of-sample predictive skill is evaluated in each case based on held-out test data, illustrated here for a hypothetical test year, 2012, based on daily data. Next, it is tested if the relationships learned also hold under climate change scenarios (annually averaged for visualization purposes). This step is only possible for climate models, demonstrated here for two example SSP projections. The black lines mark the actual climate model responses; the violet lines mark the predictions if the functions are fed with the model-consistent changes in the controlling factors (which, if approximately climate-invariant relationships were indeed established, should replicate the actual responses). Imperfections in the machine learning predictions can be measured across an ensemble of climate models, e.g. from the CMIP ensembles, and can, as such, be incorporated into the overall uncertainty quantification. This is sketched in the bottom right for a set of 39 CMIP models (red dots), here showing 30-year averages of the predictions vs. true responses for the years 2070–2100. Finally, to obtain an observational constraint on model uncertainty in Δy (cf. inter-model spread along the y axis), the functions $f_{\mathrm{obs}}$ are combined with the 39 different CMIP controlling-factor responses, leading to an observationally constrained distribution for the predicted responses $\Delta y_{\mathrm{constrained}}$. The latter is shown (light-blue distribution) on the x axis in the bottom-right figure. This preliminary distribution is then combined with the prediction error (evident in the spread around the 1:1 line across the 39 CMIP models) to obtain a final observational constraint, indicated by the wider distribution (black) along the y axis.

Ultimately, we wish to constrain climate model uncertainty in projected changes in y given already observed relationships between X and y. A first major difference compared to emergent constraints is that the functions are trained only on historical data (observations or, for consistency, climate model simulations under historical forcing conditions). The parameters θ, which characterize the function f, can later be considered to be measures of the importance of the controlling-factor relationships found. In this data-driven framework, f (and, thus, θ) can be learned individually from sets of both observational (providing observational functions $f_{\mathrm{obs},m}$) and climate model data (providing model-derived functions $f_{\mathrm{CMIP},k}$).

The workflow of the CFA framework is illustrated in Fig. 2. Expert knowledge is pivotal when selecting the factors X (yellow box) that are thought to “control” y (violet box). However, in contrast to emergent constraints where similar arguments apply to select physically plausible constraints, the physical mechanisms suggested to link the predictors to the predictand can be far more granular in CFA. Distinct thermodynamic and dynamic phenomena driving variability in the predictand can be distinguished, e.g. linking cloud occurrence to a combination of large-scale patterns of sea surface temperatures, relative humidity, and atmospheric-stability measures (Wilson Kemsley et al., 2024). Returning to Fig. 2, machine learning (central grey box) is used to derive the strength of the relationships between the factors and y. The generalization skill of these functions trained on the historical data is easily validated based on independent test data. A good first test case is, again, already observed data or historical simulations (e.g. left-out years not used during training and cross-validation), especially of extreme historical events such as the 2015–2016 El Niño event (Wilson Kemsley et al., 2024; Ceppi et al., 2024). Of course, these test data are not used during training and cross-validation or the hyperparameter tuning (see longer discussions on these issues in Bishop, 2006; Nowack et al., 2021). In Fig. 2, an example is shown for a hypothetical observational test case for the year 2012 if data from that year were not used for training. We re-iterate that separate functions can be learned and then validated in such a fashion for both observational data ($f_{\mathrm{obs},m}$) and simulations conducted with various climate models (typically, historical simulations run with different climate models, indexed by k, leading to functions $f_{\mathrm{CMIP},k}$).
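The training and held-out testing described above can be sketched with a closed-form ridge regression. Everything here is an assumption for illustration: the data are synthetic and standardized (so no intercept is fitted), the number of controlling factors and the candidate regularization strengths are invented, and the final "year" of monthly data plays the role of the left-out test year.

```python
import numpy as np

rng = np.random.default_rng(3)
n_months, n_factors = 240, 5   # 20 hypothetical "historical" years of monthly data

# Synthetic standardized controlling factors X and predictand y
theta_true = np.array([1.5, -0.8, 0.0, 0.4, 0.0])
X = rng.normal(size=(n_months, n_factors))
y = X @ theta_true + rng.normal(0.0, 0.3, size=n_months)

def fit_ridge(X, y, alpha):
    """Closed-form ridge solution: theta = (X^T X + alpha I)^-1 X^T y."""
    return np.linalg.solve(X.T @ X + alpha * np.eye(X.shape[1]), X.T @ y)

def r2(y_true, y_pred):
    return 1.0 - np.sum((y_true - y_pred) ** 2) / np.sum((y_true - y_true.mean()) ** 2)

# Hold out the last year as test data; it is never used for fitting or tuning
X_dev, y_dev, X_test, y_test = X[:-12], y[:-12], X[-12:], y[-12:]

# Select alpha by cross-validation over whole-year blocks of the remaining data
alphas = [0.01, 0.1, 1.0, 10.0, 100.0]
folds = np.array_split(np.arange(len(y_dev)), 19)   # 19 blocks of 12 months

def cv_error(alpha):
    errs = []
    for val_idx in folds:
        train_idx = np.setdiff1d(np.arange(len(y_dev)), val_idx)
        theta = fit_ridge(X_dev[train_idx], y_dev[train_idx], alpha)
        errs.append(np.mean((y_dev[val_idx] - X_dev[val_idx] @ theta) ** 2))
    return np.mean(errs)

best_alpha = min(alphas, key=cv_error)
theta = fit_ridge(X_dev, y_dev, best_alpha)
test_r2 = r2(y_test, X_test @ theta)

print(f"selected alpha: {best_alpha}, held-out R^2: {test_r2:.2f}")
```

Blocking the cross-validation by year rather than by individual month is a design choice intended to limit leakage from temporal autocorrelation, which real climate data would have even though this white-noise toy does not.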

To clarify, emergent constraints in combination with machine learning frameworks have been suggested as well (e.g. Williamson et al., 2021). However, CFAs are different in two ways: firstly, emergent constraint functions learn from emergent behaviour across climate change responses of an entire model ensemble by correlating variables characterizing the models' past behaviour (e.g. a measure of internal variability) to the model-consistent future responses in a quantity of interest (e.g. the equilibrium climate sensitivity). CFAs instead learn from internal variability and use these relationships in a climate-invariant context to constrain the future response without the latter being involved in the fitting process. Secondly, because the sample size for the relationships learned is no longer limited by the number of models in the ensemble (as is the case for emergent constraints – typically in the range of around 10–60 CMIP models), the general setting is more suitable for the application of machine learning, which strongly depends on the availability of a sufficient number of training samples. The review examples below used monthly mean data. In principle, even much higher temporal resolutions could be used, e.g. up to daily extremes, which might open up new routes for constraining changes in specific extreme weather events (Wilkinson et al., 2023; Shao et al., 2024).

The next important step is to validate – across a representative climate model ensemble – that the functions learned based on historical data also perform well under climate change scenarios, i.e. whether $f_{\mathrm{CMIP},k}$ can also skilfully predict the model-consistent climate change response (indicated by Δ) when provided with model-consistent changes in the controlling factors:

$$ \Delta y_{\mathrm{CMIP},k} \approx f_{\mathrm{CMIP},k}\big(\Delta X_{\mathrm{CMIP},k}\big) $$

Note that, for most predictand and controlling-factor variables, this will pose an extrapolation step in relation to previously unobserved value ranges. As discussed in the Introduction, this extrapolation step under, for example, strong CO2 forcing poses particular challenges for non-linear ML techniques that one might want to apply to any given CFA. Similarly, it might limit the scope of applying CFA to non-linear observational constraint problems. We see various pathways to address these challenges in CFAs, some of which have not yet been explored in the CFA literature. We will discuss these in Sect. 3.
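The validation of approximate climate invariance across an ensemble can be sketched as follows. The toy "climate models" are synthetic: each has its own sensitivities, a forced change in the controlling factors that lies well outside the historical anomaly range, and a small quadratic term (an invented coefficient) that a linear function cannot capture, so that the predictions are deliberately imperfect.

```python
import numpy as np

rng = np.random.default_rng(4)
n_models, n_months, n_factors = 20, 360, 4

def fit_ridge(X, y, alpha=1.0):
    """Closed-form ridge regression without intercept (anomaly data assumed)."""
    return np.linalg.solve(X.T @ X + alpha * np.eye(X.shape[1]), X.T @ y)

actual, predicted = [], []
for k in range(n_models):
    # Hypothetical model-specific sensitivities and historical variability
    theta_k = rng.normal([1.0, -0.5, 0.3, 0.0], 0.3)
    X_hist = rng.normal(size=(n_months, n_factors))
    y_hist = X_hist @ theta_k + rng.normal(0.0, 0.5, n_months)

    # Forced change in the controlling factors: an extrapolation step
    dX_k = rng.normal([3.0, 1.0, -1.0, 0.5], 0.5)
    # "True" response of toy model k, including a weak non-linearity
    dy_k = dX_k @ theta_k + 0.02 * dX_k[0] ** 2 + rng.normal(0.0, 0.1)

    theta_hat = fit_ridge(X_hist, y_hist)    # trained on historical data only
    actual.append(dy_k)
    predicted.append(dX_k @ theta_hat)       # learned function applied to the forced change

actual, predicted = np.array(actual), np.array(predicted)
r = np.corrcoef(actual, predicted)[0, 1]
rmse = np.sqrt(np.mean((actual - predicted) ** 2))

print(f"across-ensemble skill: r = {r:.2f}, RMSE = {rmse:.2f}")
```

The across-ensemble error (here the RMSE around the 1:1 line) is the quantity that would later be carried into the uncertainty quantification; a linear function is used because it extrapolates in a predictable way.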

If the projections are reproduced well across the ensemble of climate models, this implies that the learned relationships are approximately climate-invariant, thus opening up a new link between historically observable relationships and the future climate response, at least to the degree that is currently represented in state-of-the-art climate models. This is exciting because it provides a more direct approach to constrain model uncertainty than emergent constraints are able to provide. In the end, one can simply obtain an observational calibration of each model's response by combining the observed function(s) fobs,m with each individual model response in the controlling factors:

$$\Delta y_{k,m} = f_{\mathrm{obs},m}\left(\Delta X_{\mathrm{CMIP},k}\right)$$

Finally, since the machine learning predictions will not be perfect, the resulting distribution of observationally constrained climate model responses will need to be combined further with the method-intrinsic prediction error (see Fig. 2 and the explanation in its caption) to obtain a final observationally constrained distribution for Δy. Note that we also indexed the function fobs with the index “m” here. The index indicates that both Ceppi and Nowack (2021) and Nowack et al. (2023) trained a number of different observational functions to create the observationally constrained distribution for each model to sample and represent observational uncertainty in the relationships learned as well. For simplicity, we have dropped this index in Fig. 2.
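The steps described above can be illustrated with a minimal sketch on synthetic data. Everything in it is an assumption made for illustration: the ensemble size, the noise levels, the closed-form ridge solver `fit_ridge`, and the simple resampling used to combine the constrained responses with the prediction error are not taken from Ceppi and Nowack (2021) or Nowack et al. (2023).

```python
import numpy as np

rng = np.random.default_rng(0)


def fit_ridge(X, y, alpha=1.0):
    """Closed-form ridge regression (no intercept): solve (X'X + alpha*I) theta = X'y."""
    return np.linalg.solve(X.T @ X + alpha * np.eye(X.shape[1]), X.T @ y)


n_models, n_months, n_factors = 8, 240, 5

# Hypothetical ensemble: each model k has its own sensitivities theta_k and
# its own long-term controlling-factor response delta_X_k.
theta_models = rng.normal(1.0, 0.5, size=(n_models, n_factors))
delta_X = rng.normal(2.0, 0.3, size=(n_models, n_factors))

# Step 1: learn f_CMIP,k from "historical" monthly variability of each model.
# Step 2: predict each model's climate change response from delta_X_k alone.
predicted = np.empty(n_models)
actual = np.empty(n_models)
for k in range(n_models):
    X_hist = rng.normal(size=(n_months, n_factors))
    y_hist = X_hist @ theta_models[k] + rng.normal(scale=0.5, size=n_months)
    theta_hat = fit_ridge(X_hist, y_hist)
    predicted[k] = delta_X[k] @ theta_hat
    actual[k] = delta_X[k] @ theta_models[k]  # "model-consistent" response

# Perfect-model validation: skill and method-intrinsic prediction error.
r = np.corrcoef(predicted, actual)[0, 1]
rmse = np.sqrt(np.mean((predicted - actual) ** 2))

# Step 3: learn f_obs from synthetic "observations" and combine it with
# every model's controlling-factor response.
theta_true_obs = np.full(n_factors, 1.2)
X_obs = rng.normal(size=(n_months, n_factors))
y_obs = X_obs @ theta_true_obs + rng.normal(scale=0.5, size=n_months)
theta_obs = fit_ridge(X_obs, y_obs)
constrained = delta_X @ theta_obs  # one constrained response per model

# Step 4: widen the constrained distribution by the prediction error.
samples = rng.choice(constrained, size=10_000) + rng.normal(scale=rmse, size=10_000)

print(f"validation r = {r:.2f}, rmse = {rmse:.2f}")
print(f"constrained response: {samples.mean():.2f} +/- {samples.std():.2f}")
```

Note that the future response enters only through `delta_X`; it is never used when fitting `theta_hat` or `theta_obs`, which is the property that distinguishes this set-up from an emergent constraint.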

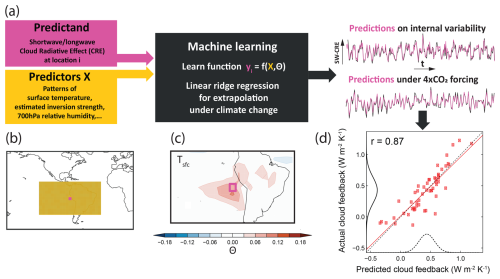

Figure 3. Cloud example for a CFA with machine learning. The workflow broadly follows the logic outlined in Fig. 2. (a) Cloud radiative effects (CREs) are predicted at a given grid location as a function of a set of controlling factors. Linear machine learning approaches such as ridge regression are currently recommended due to the need to extrapolate when using the learned relationships for predictions under climate change scenarios. The functions for each grid point are first evaluated based on monthly mean data of historical simulations and observations and are then evaluated afterwards for climate models based on monthly predictions under 4 × CO2 forcing with model-consistent changes in the controlling factors. As a sketch, this is illustrated using multi-annual predictions of a single climate model for a grid point in the tropical Pacific (top right). For comprehensive evaluations of such functions based on historical data, see, for example, the study by Wilson Kemsley et al. (2024). As the sketch for the 4 × CO2 scenario extends over 150 years, the monthly predictions and ground truth were averaged to annual means for visualization purposes. (b) Example sketch of the regional context (yellow) of many grid points surrounding a target grid point (purple) for which the CREs are predicted. (c) Example map of CMIP multi-model-mean ridge regression parameters θ for one of the controlling factors – surface temperature – when predicting shortwave CRE. In (d), the final constraint on the global cloud feedback is illustrated: using the monthly climate-model-specific predictions under 4 × CO2, these are subsequently annually averaged to calculate cloud feedback parameters from Gregory-type regressions (Gregory et al., 2004; Andrews et al., 2010) of top-of-the-atmosphere CRE anomalies against global mean surface temperature change. 
These feedback parameters (which are the linear regression slopes of these fits) are obtained separately for the ridge regression predictions and the actual 4 × CO2 simulations for each model. Afterwards, we compare the ridge-predicted CRE feedback parameters with those derived from the actual abrupt-4 × CO2 climate model simulations across the entire model ensemble. For the plot shown, we first integrated the contributions to the global shortwave and longwave CRE feedback parameter contributions across all grid points before combining the longwave and shortwave components into an overall global cloud feedback parameter. Plots for the components can be found in Ceppi and Nowack (2021) and their Supplement. Across 52 CMIP models, a strong relationship (r=0.87) is obtained. Following the combination of functions and controlling-factor responses as outlined in Fig. 2, four different observationally derived functions resulted in observationally constrained projections, shown as the uncertainty distribution along the x axis (dashed line). This distribution is combined with the methodological uncertainty to provide a final observational constraint distribution for the global cloud feedback shown along the y axis (solid line).
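The Gregory-type regression mentioned in the caption of Fig. 3 reduces to a linear fit of annual-mean CRE anomalies against global mean warming, with the slope taken as the feedback parameter. The following sketch uses synthetic data only; the warming curve, the assumed feedback of 0.4 W m⁻² K⁻¹, and the noise level are arbitrary illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(2)

years = np.arange(150)
# Hypothetical global mean warming after an abrupt forcing (K).
delta_T = 5.0 * (1.0 - np.exp(-years / 30.0))

# Hypothetical annual-mean global CRE anomalies (W m^-2) with an assumed
# "true" feedback of 0.4 W m^-2 K^-1 plus year-to-year noise.
true_feedback = 0.4
cre_anomaly = true_feedback * delta_T + rng.normal(scale=0.1, size=years.size)

# Feedback parameter = slope of the CRE anomaly regressed on warming.
slope, intercept = np.polyfit(delta_T, cre_anomaly, deg=1)
print(f"estimated feedback: {slope:.2f} W m^-2 K^-1")
```

In the workflow of Fig. 3d, such a fit would be carried out twice per model, once for the ridge-predicted CRE anomalies and once for the simulated ones, before comparing the two slopes across the ensemble.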

2.2 Taking a step back

Before we discuss the two specific applications of the machine-learning-based CFA framework, it is important to point out two built-in assumptions with regard to the nature of the resulting observational constraints:

- By compartmentalizing the prediction of y into two contributors in the form of parameters θ and controlling factors X, the constraint will be based on the observed θobs. However, current versions of CFA do not address uncertainty in the controlling-factor responses across the climate model ensemble, which essentially remains untouched.

- The CFA observational constraints are therefore conceptually closest to emergent constraints in the sense that the choice of controlling factors will be crucial for finding a constraint. However, as already mentioned above, these choices require a far smaller leap of faith in linking the predictand response to thermodynamic and dynamic mechanisms. Still, if the resulting sensitivities θ for the controlling factors are not actually uncertain, there will be no constraint. For emergent constraints, this situation is akin to cases where there would be no spread along the x axis for the observable quantity across the models. A key difference is that one first identifies process-oriented relationships between X and y in climate model data and observations, representing internal climate variability (and, possibly, historical trends), instead of directly targeting quantities that have a large spread across the model ensemble for both the predictors and the long-term response.

2.3 Application I: cloud-controlling-factor analysis

Changes in cloud properties (amount, optical depth, altitude) are the leading uncertainty factor in global warming projections under increasing atmospheric CO2 (Ceppi et al., 2017; Sherwood et al., 2020; Zelinka et al., 2020). A driving force behind this uncertainty is the still relatively coarse spatial resolution of global models, meaning that processes involved in cloud formation have to be parameterized instead of being explicitly resolved. Improvements to parameterizations relying on machine learning ideas have been suggested elsewhere (e.g. Schneider et al., 2017) and will not be discussed further here. Instead, as a first example, we will focus on CFA as an alternative viewpoint to constrain uncertainty in global cloud feedback mechanisms. As such, CFA attempts to find constraining relationships at larger spatial scales, similarly to – but, as outlined above, in important points differently to – emergent constraints. CFAs have already been used extensively to constrain uncertainty related to specific cloud feedback types, though this has primarily been done with low-dimensional multiple linear regression approaches including <10 controlling factors. A few CFA studies used non-linear machine learning methods as well, but to understand historical cloud variations rather than to derive observational constraints on future projections (e.g. Andersen et al., 2017, 2022; Fuchs et al., 2018).

Previous observational constraint studies with lower-dimensional multiple linear regression mostly focused on regionally confined major low-cloud decks (e.g. Qu et al., 2015; Zhou et al., 2015; Myers and Norris, 2016; McCoy et al., 2017; Scott et al., 2020; Cesana and Genio, 2021; Myers et al., 2021) because changes in their cumulative shortwave reflectivity contribute a large fraction to the overall uncertainty in global cloud feedback (Sherwood et al., 2020). Building on this work, Ceppi and Nowack (2021) developed a statistical learning analysis using ridge regression (Hoerl and Kennard, 1970) as a linear form of machine learning. This new approach to CFA allowed them to improve on previous CFA constraints and to expand the scope beyond the low-cloud decks to the global scale for both shortwave (clouds are reflective, thus cooling climate) and longwave (clouds can trap terrestrial radiation, thus warming climate) cloud radiative effects. Here, we will briefly review these results as an example of how CFA can be developed to constrain model uncertainty more effectively by including machine learning ideas. A sketch of the framework is shown in Fig. 3.

As in previous lower-dimensional CFA for clouds, Ceppi and Nowack (2021) focused on a relatively short, well-observed period during the satellite era. In their set-up, this translates into a regression approach in which cloud-radiative anomalies at grid point r, dC(r,t), are approximated as a linear function of anomalies in a set of M meteorological cloud-controlling factors dXi(r,t):

$$dC(r,t) \approx \sum_{i=1}^{M} \theta_i(r) \cdot dX_i(r,t)$$

where the parameters θi(r) represent the learned sensitivities of C(r,t) to the controlling factors. Here, C(r,t) could, in principle, be different types of measures to characterize cloud contributions to shorter-term variations (here, monthly) and long-term changes (including the climate change response) in Earth's energy budget. Ceppi and Nowack (2021) separated shortwave from longwave cloud radiative effects, and further common decompositions include high-cloud and low-cloud contributions, as well as changes in cloud fractions, cloud top pressure, and cloud optical depth (Wilson Kemsley et al., 2024; Ceppi et al., 2024). As a key difference in relation to previous studies, which focused on grid-point-wise relationships, e.g. between surface temperature at point r and C(r,t), Ceppi and Nowack (2021) regressed cloud radiative anomalies at grid point r as a function of the controlling factors within a 105° × 55° (long × lat) gridded domain centred on r (Fig. 3b, c), rendering the regression high-dimensional. The contribution of each controlling factor to dC(r,t) is then obtained by the scalar product of the spatial vectors θi(r) and dXi(r,t).
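To make the high-dimensional regression and the per-factor scalar products concrete, the following sketch fits a closed-form ridge regression on synthetic data. The domain size (20 grid points rather than a 105° × 55° box), the two factors, and the regularization strength `alpha` are arbitrary assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)

n_months, n_factors, n_points = 300, 2, 20  # M = 2 factors, 20 domain grid points

# dX[t, i, p]: anomaly of controlling factor i at domain grid point p in month t.
dX = rng.normal(size=(n_months, n_factors, n_points))

# Hypothetical "true" sensitivity patterns theta_i(r), one spatial vector per factor.
theta_true = rng.normal(scale=0.3, size=(n_factors, n_points))
dC = np.einsum("tip,ip->t", dX, theta_true) + rng.normal(scale=0.5, size=n_months)

# Flatten factors x grid points into one high-dimensional predictor matrix.
X = dX.reshape(n_months, n_factors * n_points)

# Ridge regression in closed form; alpha is an arbitrary illustrative value.
alpha = 10.0
theta_hat = np.linalg.solve(X.T @ X + alpha * np.eye(X.shape[1]), X.T @ dC)
theta_hat = theta_hat.reshape(n_factors, n_points)

# Contribution of factor i = scalar product of theta_i(r) and dX_i(r, t).
contributions = np.einsum("tip,ip->ti", dX, theta_hat)  # shape (n_months, n_factors)
dC_pred = contributions.sum(axis=1)
```

Each column of `contributions` is the part of the predicted anomaly attributable to one controlling factor, and the columns sum to the full prediction.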

An important choice is the set of controlling factors. Heuristics that motivate various predictors for low-cloud decks can be found in Klein et al. (2017), and those for high clouds can be found in Wilson Kemsley et al. (2024). In Ceppi and Nowack (2021), the authors used five different patterns of cloud-controlling factors, which were used to train the predictions on historical data. However, for an effective constraint on the cloud feedback under abrupt-4 × CO2 forcing across CMIP5 and CMIP6 models, they only considered two factors that drive the main part of the climate change response (rather than variability), at least when averaged globally. These were patterns of surface temperature (the most important factor) and of the estimated inversion strength (EIS, an important modulating factor, though a different stability measure was used over land). Overall, the study demonstrated that the use of machine learning ideas opens the door to consider a larger spatial context, which improves the CFA function in terms of its predictions and, eventually, also improves the overall observational constraint (Fig. 3d). This further allowed for the extension of CFA frameworks of cloud feedback mechanisms from specific low-cloud analyses to the global scale and to new cloud types (in particular, high clouds; see Wilson Kemsley et al., 2024).

2.4 Application II: an observational constraint on the stratospheric-water-vapour feedback

The linearity assumption appears to work well to the first order for global cloud feedback, but this is not guaranteed for many other uncertain Earth system feedbacks. A first counter-example can be found in Nowack et al. (2023), who adapted the framework presented in Ceppi and Nowack (2021) to constrain uncertainty in changes in specific humidity across the stratosphere. This stratospheric-water-vapour feedback is, indeed, highly uncertain in CMIP models, with model responses ranging from virtually no response to more than a tripling of concentrations relative to present-day values in 4 × CO2 simulations. This, in turn, makes significant contributions to uncertainties in projections of global warming (Stuber et al., 2005; Joshi et al., 2010; Dietmüller et al., 2014; Nowack et al., 2015, 2018b; Keeble et al., 2021), the tropospheric-circulation response (Joshi et al., 2006; Charlesworth et al., 2023), and the recovery of the ozone layer (Dvortsov and Solomon, 2001; Stenke and Grewe, 2005).

To address this uncertainty, Nowack et al. (2023) defined a CFA using ridge regression (Hoerl and Kennard, 1970), in which they predicted monthly mean water vapour concentrations in the tropical lower stratosphere (qstrat) as a function of temperature variations in the upper troposphere and lower stratosphere (UTLS). Their analysis was directly motivated by the strong mechanistic link between tropical UTLS temperature and water vapour entry rates; see, for example, Fueglistaler et al. (2005, 2009). Their final controlling-factor function was defined as follows:

which takes into account standard-scaled temperature anomalies dT across a whole longitude–latitude–altitude cube of the tropical to mid-latitude UTLS region over τmax monthly time lags. Using this function, both internal variability in qstrat (for observations and CMIP models) and the long-term climate change response (CMIP models) could be predicted well.
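One plausible way to write a function matching this verbal description is given below. The index names (i, j, k for longitude, latitude, and altitude) and the symbol θ for the regression coefficients are chosen here for consistency with the cloud example and are assumptions, not the notation of Nowack et al. (2023):

$$q_{\mathrm{strat}}(t) \approx \sum_{\tau=0}^{\tau_{\max}} \sum_{i,j,k} \theta_{ijk\tau}\, dT_{ijk}(t-\tau)$$

Each coefficient thus weights the temperature anomaly at one location in the UTLS cube and one monthly lag.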

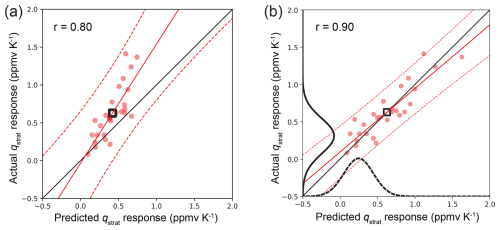

However, under abrupt-4 × CO2 forcing, the function notably only held true after log-transforming the predictand before training, which apparently led to a quasi-linearization of the relationships to be learned (Fig. 4). The need for such a transformation is not unexpected due to the known approximately exponential relationship between temperature and saturation water vapour concentrations and simply underlines that similar CFAs could be designed for many other uncertain Earth system feedbacks, even if non-linear, if appropriate physics-informed transformations can be applied.
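The effect of the log-transformation can be reproduced with a toy example. The baseline value, the exponential growth rate, and the noise level below are arbitrary, and ordinary least squares stands in for ridge regression to keep the sketch short.

```python
import numpy as np

rng = np.random.default_rng(3)

q0, growth = 4.0, 0.3  # arbitrary baseline (ppmv) and fractional growth rate per K


def true_q(dT):
    """Hypothetical exponential dependence of water vapour on temperature."""
    return q0 * np.exp(growth * dT)


# "Historical" variability: small temperature anomalies with multiplicative noise.
dT_hist = rng.uniform(-1.0, 1.0, size=240)
q_hist = true_q(dT_hist) * np.exp(rng.normal(scale=0.05, size=dT_hist.size))

# (a) Linear fit to the untransformed predictand.
slope_raw, icpt_raw = np.polyfit(dT_hist, q_hist, deg=1)
# (b) Linear fit after log-transforming the predictand.
slope_log, icpt_log = np.polyfit(dT_hist, np.log(q_hist), deg=1)

dT_future = 6.0  # far outside the training range
pred_raw = icpt_raw + slope_raw * dT_future
pred_log = np.exp(icpt_log + slope_log * dT_future)
truth = true_q(dT_future)

print(f"truth {truth:.1f}, untransformed {pred_raw:.1f}, log-transformed {pred_log:.1f}")
```

Within the training range, both fits look adequate; only the extrapolation reveals that the untransformed fit underestimates large responses.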

Figure 4. Constraint on stratospheric-water-vapour projections requiring a non-linear transformation. (a) Linear ridge regression without transformation of the predictand. (b) After log-transforming the predictand before training on historical data. Without the log-transformation, the predictions for large changes increasingly underestimate the actual responses in the corresponding abrupt-4 × CO2 simulations, and the scatter in the predictions also increases (lowering r). With the transformation, the predicted water vapour responses agree well with the actual simulated responses (provided in parts per million volume (ppmv), normalized by model-consistent global mean surface temperature change to convert the change into a feedback). The final observational constraint is calculated similarly to the cloud example; for further details, see Nowack et al. (2023). The dashed red lines mark the prediction intervals, whereas the solid red lines show linear regressions fitted to the data (Wilks, 2006).

3.1 Dealing with non-linearities

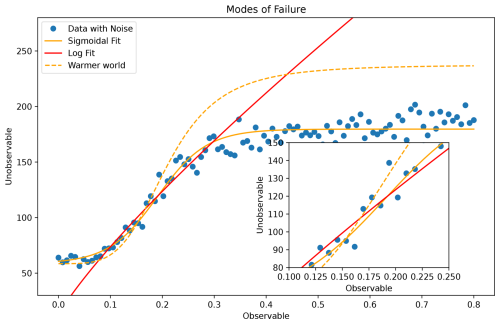

As already implied by the stratospheric-water-vapour example, not all relationships we wish to constrain will be linear. For example, while not typically considered in the emergent constraint literature, the aerosol effective radiative forcing (ERF) is defined with reference to an un-observed pre-industrial atmospheric state and so faces many of the same challenges described above (see also Fig. 5). Since the relationships between aerosol emissions and cloud properties and between cloud properties and radiative forcing are known to be non-linear (Carslaw et al., 2013a), extrapolating from observed to unobserved climate states, while necessary, is fraught with danger.

Besides the obvious risk that, if we naively attempted to fit non-linear functions to such relationships, we could easily over-fit our data, Fig. 5 shows the opposite risk in that assuming the non-linearities to be small based on the observed data (inset) could lead us to under-fitting the response over larger ranges. If at all possible, we should look to collect observations in these outlying regions, perhaps looking at particularly clean atmospheric conditions in the case of aerosol (Carslaw et al., 2013a; Gryspeerdt et al., 2023).

Looking beyond emergent constraints and towards the CFA framework discussed in Sect. 2, we further highlight four strategies to address the extrapolation challenge in non-linear contexts. In our opinion, these strategies have not yet been exploited sufficiently in the existing literature and could be promising pathways for future work:

- Linearizations and quasi-linearizations. In the stratospheric-water-vapour example, we demonstrated how linearizing relationships can help tackle non-linear observational-constraint challenges. In particular, prior physical knowledge – such as the approximately exponential relationship between temperature and specific humidity – can be used to transform the regression problem towards a more linear behaviour, thus facilitating extrapolation.

- Climate-invariant data transformations. Another promising route could be to pursue ideas similar to variable transformations recently suggested for climate model parameterizations (Beucler et al., 2024). In essence, variables that require extrapolation in warmer climates could be transformed into substitute variables whose distribution ranges are approximately climate-invariant, for example, because they cannot (or hardly ever) cross certain physical thresholds (e.g. relative humidity which can vary only between 0 % and – mostly – 100 %). Such ideas are not discussed in detail here; we rather refer the reader to Beucler et al. (2024).

- Moving non-linear contributions to the controlling-factor responses. CFAs aim to observationally constrain the parameters θ that characterize the dependence of the predictand on the controlling factors. The controlling-factor responses, however, are not constrained and can, of course, behave non-linearly. In a linear CFA framework, this description would be comparable to a linear function that depends on polynomial or logarithmic terms; one can still constrain the linear model parameters in that case. This idea is not distinct from the point on quasi-linearizations but helps to underline the difference in approaches with regard to whether the predictand or the predictor(s) are transformed to obtain an approximately linear model.

- Non-linear methods incorporating prior physical knowledge to constrain the solution space. In Sect. 4, we will discuss ideas on how non-linear machine learning methods could indeed be applied to CFA frameworks. For example, this concerns Gaussian processes with appropriate choices of priors or with the combination of linear and non-linear kernels to model both linear and non-linear variations in the predictand simultaneously. In addition, physics-informed machine learning approaches (Karniadakis et al., 2021) could help to define saturation regimes in machine learning functions, particularly through, but not limited to, modifications to their cost functions.
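The third strategy, keeping the model linear in its parameters while letting the predictor carry the non-linearity, can be illustrated with a toy example. The logarithmic response, the training range, and the helper `fit_and_predict` are hypothetical and chosen only to show the contrast in extrapolation behaviour.

```python
import numpy as np

rng = np.random.default_rng(4)


def response(x):
    """Hypothetical true response: logarithmic in the underlying driver x."""
    return 2.0 * np.log(x)


# Training data cover only a narrow range of the driver.
x_hist = rng.uniform(1.0, 1.5, size=100)
y_hist = response(x_hist) + rng.normal(scale=0.05, size=x_hist.size)


def fit_and_predict(feature, x_train, y_train, x_new):
    """Least-squares fit of y = theta0 + theta1 * feature(x), then predict at x_new."""
    A = np.column_stack([np.ones_like(x_train), feature(x_train)])
    theta, *_ = np.linalg.lstsq(A, y_train, rcond=None)
    return theta[0] + theta[1] * feature(x_new)


x_future = 4.0  # well outside the training range
pred_raw = fit_and_predict(lambda x: x, x_hist, y_hist, x_future)  # linear in x
pred_log = fit_and_predict(np.log, x_hist, y_hist, x_future)       # linear in log(x)
truth = response(x_future)

print(f"truth {truth:.2f}, linear in x {pred_raw:.2f}, linear in log(x) {pred_log:.2f}")
```

Both models have two linear parameters that could be constrained from observations; only the choice of predictor differs.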

Figure 5. A schematic diagram of a typical emergent constraint showing the relationship between an unobserved quantity (Y; say effective radiative forcing (ERF)) and an observed quantity (X). This holds well over a limited region of X (inset). This relationship may fail to hold outside the observed region though, particularly if the response is (or becomes) non-linear. This relationship can also break down if a (possibly) unobserved variable Z affects both X and Y, causing a confounding that changes the relationship in, for example, a warmer world (or the past).

3.2 Confounding

Confounding occurs when an extraneous variable influences both the dependent variable and an independent variable, leading to a spurious association. This is particularly challenging in climate science, where numerous interacting processes can lead to complex relationships between variables. For instance, in the context of Fig. 5, the apparent influence of an observed variable on an unobserved variable may actually be mediated or obscured by another uncontrolled variable, such as temperature. This confounding can severely compromise the identification and validation of emergent constraints or controlling-factor relationships. Machine learning methods, though powerful in detecting patterns, are not inherently equipped to distinguish causal relationships from mere correlations unless specifically designed to do so. A possibility to address this challenge through causal discovery methods will be discussed in Sect. 4.2.
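A small synthetic example shows how a confounder can create an apparent relationship. All variables and coefficients are invented for illustration: Z drives both X and Y, while X has no direct effect on Y.

```python
import numpy as np

rng = np.random.default_rng(5)
n = 5000

z = rng.normal(size=n)                        # confounder (e.g. temperature)
x = z + rng.normal(scale=0.5, size=n)         # observed quantity, driven by z
y = 2.0 * z + rng.normal(scale=0.5, size=n)   # target, driven by z only

# Naive regression of y on x suggests a strong dependence.
naive_slope = np.polyfit(x, y, deg=1)[0]

# Including the confounder as a predictor removes the spurious dependence on x.
A = np.column_stack([np.ones(n), x, z])
coef, *_ = np.linalg.lstsq(A, y, rcond=None)
adjusted_slope = coef[1]

print(f"naive slope {naive_slope:.2f}, slope after adjusting for z {adjusted_slope:.2f}")
```

The adjustment works here only because Z is measured; with an unobserved confounder, the naive slope is all that the data offer, and it would change whenever the relationship between Z and X changes.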

3.3 Blind spots in climate model ensembles

Clearly, any observational constraint approach that requires climate models to validate the mathematical model used to constrain the future response is potentially affected by blind spots in the ensemble. For example, blind spots could be potentially missing physical mechanisms across all models as implied in, for example, Kang et al. (2023) for Southern Hemisphere sea surface temperature changes. This limitation, however, applies in similar ways to all types of approaches discussed here, including classic statistical climate model evaluation, emergent constraints, and CFA. For CFA, this affects the evaluation of the climate-invariance property of the relationships found if they are to be evaluated well beyond historical climate forcing levels.

Still, a well-chosen set of proxy variables as predictors for CFA can, to some extent, help to buffer against such effects. In the stratospheric-water-vapour example, the authors focused on the CO2-driven climate feedback. As it stands, such an approach brackets out other potential mechanisms for future changes in stratospheric water vapour through chemical mechanisms related to methane (Nowack et al., 2023) or to changes in the background stratospheric aerosol loading (Kroll and Schmidt, 2024; Marshall et al., 2024). However, the monthly mean temperature variations around the tropopause will naturally integrate multiple mechanisms contributing to water vapour variability, some of which the authors did not explicitly think of during their framework design. Notably, the same variations will never truly reflect the most intuitive mechanism of the immediate dehydration of air parcels during their ascent from the troposphere into the stratosphere. The latter would require a Lagrangian perspective and much higher temporal and spatial resolutions in the data the CFA is applied to. At the same time, other processes potentially contributing to water vapour variations, such as convective overshooting, radiation–circulation interactions, or cirrus clouds (Dessler et al., 2016; Ming et al., 2016), will likely already have an effect in the present day and would thus be part of the observationally derived parameters in the constraint functions (i.e. lowering or increasing the observationally derived sensitivities).

Having said that, what always remains uncertain in CFA is whether the distribution of controlling-factor changes in the ensemble of climate models truly encapsulates their future true response to CO2 forcing. If not, constraining functions learned from past data might provide a different constraint on the future feedback if combined with a set of controlling-factor responses hypothesized to better represent suggested blind-spot mechanisms. In any case, such tests could be valuable to explore the implications of potential climate model blind spots for the robustness of observational constraints. Specific simulations with a supposedly more mechanistically complete model or simulations subject to larger ranges of values for uncertain climate model parameters (see also perturbed physics simulations discussed in Sect. 4.3) could be useful starting points in this regard. Tests along these lines could provide valuable insights with respect to the sensitivity of CFA observational constraints to varying the assumptions inherent in state-of-the-art climate models.

In Sect. 3, we highlighted several challenges in the application of machine learning to observational constraints on state-of-the-art climate model ensembles. With careful consideration of these challenges, however, machine learning has the potential to be a powerful tool to learn more sophisticated, objective (emergent) constraints that can be validated through cross-validation and perfect model tests. On top of the machine-learning-augmented CFA outlined in Sect. 2, here, we highlight a few more ways in which machine learning can be used to find and improve the robustness of observational constraints.

4.1 Physical priors

In many cases, we already have a reasonable approximation of the functional form of a physical response but would like to capture uncertain elements, such as free parameters or closures, in a consistent and transparent way. In the stratospheric-water-vapour example above, this was the known non-linear relationship between temperature and saturation water vapour. In Bayesian terms, we already have an informative prior. As such, using a Bayesian approach can be a powerful way of encoding this information and updating it with observations to provide predictions with well-calibrated uncertainties.

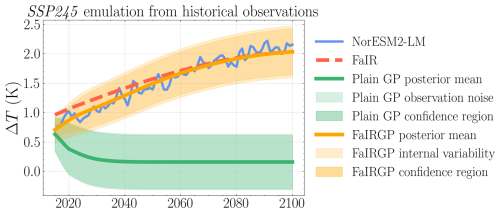

Figure 6. Example of using a Bayesian model with a physical prior to enable accurate and well-calibrated extrapolation of climate projections. Both the FaIR model and the FaIRGP model (which encodes the FaIR response in the covariance function) accurately reproduce the NorESM2 warming under SSP2-4.5 despite having only seen historical temperatures. The plain GP has no physical regularization and quickly reverts to its mean function.

One recent example of this utilizes the functional form of a simple energy balance model (FaIR, in this case; Leach et al., 2021) as a prior for a Gaussian process (GP) emulation of the temperature response to a given forcing (Bouabid et al., 2023). By constructing the statistical (machine learning) model to respect the physical form of the response, it is able to better predict future warming. Importantly, for this discussion, this approach performs significantly better than an unconstrained GP when making out-of-sample predictions (extrapolating). For example, by training both GPs only on outputs from a global climate model (GCM) representing the historical period, the physical GP is able to accurately predict future warming under SSP2-4.5, while the plain GP quickly reverts to its mean function. This behaviour is not confined to GPs; any highly parameterized regression technique (such as a neural network) would produce spurious results without the strong regularization that the physical form provides. Similarly, physical constraints imposed on machine learning cost functions, as is the case in physics-informed machine learning (Chen et al., 2021; Karniadakis et al., 2021; Kashinath et al., 2021), could be powerful tools to be used in this context.
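The reversion of a plain GP to its mean function can be demonstrated with a deliberately simplified sketch. Unlike FaIRGP, which encodes the physical response in the covariance function, the example below places a stand-in "physical model" in the prior mean; the linear trend, kernel settings, and synthetic "GCM" output are all assumptions.

```python
import numpy as np


def rbf_kernel(a, b, length=10.0, variance=1.0):
    """Squared-exponential covariance between 1-D input arrays a and b."""
    d = a[:, None] - b[None, :]
    return variance * np.exp(-0.5 * (d / length) ** 2)


def gp_posterior_mean(t_train, y_train, t_test, mean_fn, noise=0.05):
    """Posterior mean of a GP with prior mean mean_fn and an RBF covariance."""
    K = rbf_kernel(t_train, t_train) + noise**2 * np.eye(t_train.size)
    K_star = rbf_kernel(t_test, t_train)
    weights = np.linalg.solve(K, y_train - mean_fn(t_train))
    return mean_fn(t_test) + K_star @ weights


def physical_mean(t):
    """Stand-in for a simple physical model: 0.02 K of warming per year."""
    return 0.02 * t


def zero_mean(t):
    """Prior mean of the plain GP."""
    return np.zeros_like(t)


def truth(t):
    """Hypothetical 'GCM' output: the physical trend plus slow variability."""
    return 0.02 * t + 0.1 * np.sin(t / 8.0)


t_hist = np.arange(0.0, 101.0)       # training period (years)
t_future = np.array([150.0, 200.0])  # extrapolation targets

plain = gp_posterior_mean(t_hist, truth(t_hist), t_future, zero_mean)
physical = gp_posterior_mean(t_hist, truth(t_hist), t_future, physical_mean)

print("truth:   ", np.round(truth(t_future), 2))
print("plain GP:", np.round(plain, 2))
print("physical:", np.round(physical, 2))
```

Far from the training data, the kernel contributes almost nothing, so each prediction is governed by its prior mean: zero for the plain GP and the physical trend for the informed one.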

4.2 Discovering controlling factors

Causal discovery and inference techniques allow us to robustly detect potential constraints and to address the challenge of confounding variables, respectively (Runge et al., 2019; Camps-Valls et al., 2023). Methods such as causal discovery or the use of instrumental variables could help in distinguishing true climate signals from confounding noise. Furthermore, enhancing the datasets with more comprehensive metadata that capture potential confounders and applying robust statistical techniques to explicitly model these confounders can aid in mitigating their effects. Such approaches would strengthen the reliability of machine-learning-driven analyses, ensuring that the emergent constraints or CFAs reflect more accurate and physically plausible relationships that hold under various climate change scenarios. An interesting analogy is that with CFA from Sect. 2; significant confounding, which might change the detected historical relationships under climate change, should also lead to a corresponding decrease in the predictive skill of the climate change response under, for example, 4 × CO2 forcing. As such, poorly performing CFA extrapolations might be a good indicator of poorly designed causal (proxy) relationships among the controlling factors and the predictand.

4.3 Perturbed parameter ensembles

Perturbed physics ensembles (PPEs) (Murphy et al., 2004; Mulholland et al., 2017) present a significant opportunity in the realm of CFA by allowing researchers to systematically explore the sensitivity of climate models to changes in physical parameterizations. By adjusting various parameters within a climate model, PPEs generate a range of plausible climate outcomes, which can then be analysed to understand how specific processes impact model outputs. This systematic variation of parameters helps isolate the influence of individual factors, thereby providing deeper insights into the workings of climate models than is possible by simply comparing a small ensemble of qualitatively different models.

The utility of PPEs extends beyond the internal processes of models to potentially enhance our understanding of real-world observations. By identifying which parameters and model configurations yield the best alignment with observed climate data, researchers can infer which physical processes might be driving observed changes in the climate system. This transfer of learning from models to learning from observations is crucial for improving the robustness and credibility of climate projections. Moreover, the knowledge gained through PPEs can guide the development of more refined machine learning algorithms that are capable of incorporating complex, non-linear interactions discovered in observations. Thus, combined with the causal discovery approaches outlined above, PPEs not only enrich our understanding of climate models but can also serve as a resource for informing robust (physical) CFAs.
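As a rough illustration of how a PPE can be confronted with observations, the sketch below perturbs a single parameter of a toy model and retains only the members consistent with a synthetic observation. The toy model, parameter range, and tolerance are invented; real PPEs involve many parameters and typically rely on emulators.

```python
import numpy as np

rng = np.random.default_rng(6)
n_members = 500

# Perturbed parameter: a hypothetical feedback strength (arbitrary units).
feedback = rng.uniform(0.5, 2.0, size=n_members)


def toy_model(feedback, forcing):
    """Toy equilibrium response: forcing divided by feedback strength."""
    return forcing / feedback


hist = toy_model(feedback, forcing=1.0)    # observable historical response
future = toy_model(feedback, forcing=4.0)  # response under stronger forcing

# Keep only members whose historical response matches the synthetic observation.
obs, obs_uncertainty = 1.0, 0.1
plausible = np.abs(hist - obs) <= obs_uncertainty

print(f"members retained: {plausible.sum()} of {n_members}")
print(f"future response, full PPE:  {future.mean():.2f} +/- {future.std():.2f}")
print(f"future response, plausible: {future[plausible].mean():.2f} "
      f"+/- {future[plausible].std():.2f}")
```

The narrowing of the future response reflects only what the chosen observable can constrain; parameters that do not affect it would remain unconstrained.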

While all climate change studies with machine learning necessarily face the challenge of extrapolation in the presence of (potential) non-linearity, there are clearly opportunities and methods to make the power of machine learning accessible to the scientific challenge. Here, we took the perspective of how machine learning can help us provide better observational constraints on the still substantial uncertainties in climate model projections. In particular, we highlighted controlling-factor analyses (CFAs) combined with machine learning as a promising route to pursue and contrasted this approach to emergent constraints. On the one hand, emergent constraints share common ground with CFAs in that they still require expert knowledge in the choice of predictors and in that they require a leap of faith in the whole ensemble of state-of-the-art climate models. On the other hand, CFAs learn functions that provide a more direct link between the past and future response, reduce oversimplification through the learning of more complex functional relationships, and allow for a more comprehensive out-of-sample validation of the predictive skill regarding both past (climate models and observations) and future data (models only). As such, CFAs are arguably also less prone to the risk of data-mining correlations that are justified a posteriori on the basis of physical plausibility arguments.

Ultimately, CFAs might also help to validate proposed emergent constraints in the future. In essence, for this to happen, one would have to set up an effective CFA targeting the same uncertain predictand. Existing emergent constraints could, thus, in many ways, be considered to be useful starting points for this new field in the spirit of working towards “multiple lines of evidence”. We further provided a wider perspective on the challenges of using machine learning for observational and, specifically, emergent constraints, such as non-linearity and confounding. Key opportunities to address these challenges can be found in physics-informed data transformations, physics-informed machine learning, causal algorithms, perturbed physics ensembles, and the imposition of physical knowledge through physical priors in Bayesian methods.

While we refrain from over-explaining our intentionally philosophical paper title, it is clear that emergent constraints tend to be low-dimensional and somewhat simplistic. Consequently, they will necessarily be “wrong” to varying degrees, as are all models of the truly complex real world. In this respect, they have much in common with the climate models they are derived from. Nonetheless, emergent constraints, along with other statistical evaluation methods, are essential because raw model ensembles alone would offer only limited insight when it comes to Earth's uncertain future. Emergent constraints have effectively motivated research into poorly understood climate processes, contributing to scientific understanding and inspiring further model development. They will remain valuable tools for the climate science community for the foreseeable future. In this paper, we propose that CFAs – a conceptually related yet distinct approach – could play an important role not only in validating and complementing but also in moving beyond the current evidence provided by emergent constraints.

Finally, we underline an analogy between the development of machine learning and climate models. This analogy, in turn, could motivate adjustments to frameworks for climate model development and evaluation cycles. Specifically, the training of machine learning models bears some similarities to the tuning of climate models in relation to historical observations (e.g. Mauritsen et al., 2012; Hourdin et al., 2017). As a result, one might argue that model intercomparisons, weightings, and evaluations against those same data are far less meaningful, much as one should not evaluate machine learning models against their training data (a good fit could simply – as in most cases – imply overfitting rather than good, generalizable predictive skill). Of course, there are intrinsically regularizing features in the form of physical laws in any physics-based modelling system, which will somewhat mitigate such effects as compared to fitting a neural network without physical constraints. Still, we see scope for defining dedicated historical test datasets as part of future model intercomparison exercises. These test datasets should not be included during climate model tuning. For example, one could agree that all model tuning should stop by the year 2005 (typically the last year of historical simulations for CMIP5), which would leave around two decades for objective model evaluation of recent trends and variability. Through continued scientific exchanges of ideas of this kind, there will be many different ways for the disciplines of machine learning and climate science to learn from one another.
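The held-out test period idea can be illustrated with a small sketch on a synthetic time series. This is a toy example under stated assumptions, not a climate model evaluation: the series, the two "models" (a linear trend fit and a lookup table that memorizes the tuning period), and all function names are hypothetical. Only the 2005 cutoff comes from the example in the text.

```python
import math

CUTOFF_YEAR = 2005  # tuning stops here, as in the CMIP5-based example in the text


def synthetic_series(start=1950, end=2024):
    """Toy 'observations': linear trend plus deterministic variability (illustrative)."""
    years = list(range(start, end + 1))
    values = [0.02 * (y - start) + 0.05 * math.sin(y) for y in years]
    return years, values


def temporal_split(years, values, cutoff=CUTOFF_YEAR):
    """Split into a tuning period (<= cutoff) and a held-out test period (> cutoff)."""
    pairs = list(zip(years, values))
    train = [(y, v) for y, v in pairs if y <= cutoff]
    test = [(y, v) for y, v in pairs if y > cutoff]
    return train, test


def fit_linear(train):
    """Ordinary least-squares trend: a simple, strongly regularized model."""
    n = len(train)
    mean_y = sum(y for y, _ in train) / n
    mean_v = sum(v for _, v in train) / n
    cov = sum((y - mean_y) * (v - mean_v) for y, v in train)
    var = sum((y - mean_y) ** 2 for y, _ in train)
    slope = cov / var
    intercept = mean_v - slope * mean_y
    return lambda year: intercept + slope * year


def fit_memorizer(train):
    """Maximally overfit model: reproduces the tuning data exactly and
    falls back to the last tuned value for any unseen year."""
    lookup = dict(train)
    last_value = train[-1][1]
    return lambda year: lookup.get(year, last_value)


def rmse(model, data):
    """Root-mean-square error of a model over (year, value) pairs."""
    return math.sqrt(sum((model(y) - v) ** 2 for y, v in data) / len(data))


years, values = synthetic_series()
train, test = temporal_split(years, values)
models = {"linear": fit_linear(train), "memorizer": fit_memorizer(train)}
scores = {
    name: {"train": rmse(model, train), "test": rmse(model, test)}
    for name, model in models.items()
}
for name, score in scores.items():
    print(f"{name:10s} train RMSE = {score['train']:.3f}  test RMSE = {score['test']:.3f}")
```

Scored on the tuning period alone, the memorizer looks perfect. Only the held-out years after the cutoff show that the simpler trend model generalizes better, which is the distinction a dedicated historical test dataset is meant to expose.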

This is an opinion article reflecting on the state of the art. All visualizations are based on existing publications and datasets. We refer to the original publications for the corresponding code availability.

All data used are publicly available as part of the CMIP6 (https://esgf-index1.ceda.ac.uk/search/cmip6-ceda/, Eyring et al., 2016) and CRU (https://crudata.uea.ac.uk/cru/data/hrg/, Harris et al., 2020) data archives.

Both authors co-designed and co-wrote the paper. For the initial draft, PN focused on Sects. 1 and 2, and DWP focused on Sects. 3 and 4, with later additions by PN to address reviewer comments.

At least one of the (co-)authors is a member of the editorial board of Atmospheric Chemistry and Physics. The peer-review process was guided by an independent editor, and the authors also have no other competing interests to declare.

Publisher’s note: Copernicus Publications remains neutral with regard to jurisdictional claims made in the text, published maps, institutional affiliations, or any other geographical representation in this paper. While Copernicus Publications makes every effort to include appropriate place names, the final responsibility lies with the authors.

This article is part of the special issue “20 years of Atmospheric Chemistry and Physics”. It is not associated with a conference.

The authors thank Paulo Ceppi (Imperial College London) for the helpful comments. We acknowledge the World Climate Research Programme, which, through its Working Group on Coupled Modelling, coordinated and promoted CMIP6. We thank the climate modelling groups for producing and making available their model output, the Earth System Grid Federation (ESGF) for archiving the data and providing access, and the multiple funding agencies who support CMIP6 and ESGF.

This research has been supported by the Natural Environment Research Council (grant no. NE/V012045/1).

The article processing charges for this open-access publication were covered by the Karlsruhe Institute of Technology (KIT).

This paper was edited by Timothy Garrett and Ken Carslaw and reviewed by two anonymous referees.

Abramowitz, G. and Bishop, C. H.: Climate model dependence and the ensemble dependence transformation of CMIP projections, J. Climate, 28, 2332–2348, https://doi.org/10.1175/JCLI-D-14-00364.1, 2015.

Abramowitz, G., Herger, N., Gutmann, E., Hammerling, D., Knutti, R., Leduc, M., Lorenz, R., Pincus, R., and Schmidt, G. A.: ESD Reviews: Model dependence in multi-model climate ensembles: weighting, sub-selection and out-of-sample testing, Earth Syst. Dynam., 10, 91–105, https://doi.org/10.5194/esd-10-91-2019, 2019.

Allen, M. R. and Ingram, W. J.: Constraints on future changes in climate and the hydrologic cycle, Nature, 419, 224–232, https://doi.org/10.1038/nature01092, 2002.

Andersen, H., Cermak, J., Fuchs, J., Knutti, R., and Lohmann, U.: Understanding the drivers of marine liquid-water cloud occurrence and properties with global observations using neural networks, Atmos. Chem. Phys., 17, 9535–9546, https://doi.org/10.5194/acp-17-9535-2017, 2017.

Andersen, H., Cermak, J., Zipfel, L., and Myers, T. A.: Attribution of Observed Recent Decrease in Low Clouds Over the Northeastern Pacific to Cloud-Controlling Factors, Geophys. Res. Lett., 49, 1–10, https://doi.org/10.1029/2021gl096498, 2022.

Andrews, T., Forster, P. M., Boucher, O., Bellouin, N., and Jones, A.: Precipitation, radiative forcing and global temperature change, Geophys. Res. Lett., 37, L14701, https://doi.org/10.1029/2010GL043991, 2010.

Bellouin, N., Quaas, J., Gryspeerdt, E., Kinne, S., Stier, P., Watson-Parris, D., Boucher, O., Carslaw, K. S., Christensen, M., Daniau, A. L., Dufresne, J. L., Feingold, G., Fiedler, S., Forster, P., Gettelman, A., Haywood, J. M., Lohmann, U., Malavelle, F., Mauritsen, T., McCoy, D. T., Myhre, G., Mülmenstädt, J., Neubauer, D., Possner, A., Rugenstein, M., Sato, Y., Schulz, M., Schwartz, S. E., Sourdeval, O., Storelvmo, T., Toll, V., Winker, D., and Stevens, B.: Bounding Global Aerosol Radiative Forcing of Climate Change, Rev. Geophys., 58, e2019RG000660, https://doi.org/10.1029/2019RG000660, 2020.

Beucler, T., Pritchard, M., Gentine, P., and Rasp, S.: Towards physically-consistent, data-driven models of convection, IEEE Xplore, 3987–3990 pp., https://doi.org/10.1109/IGARSS39084.2020.9324569, 2020.

Beucler, T., Gentine, P., Yuval, J., Gupta, A., Peng, L., Lin, J., Yu, S., Rasp, S., Ahmed, F., O'Gorman, P. A., Neelin, J. D., Lutsko, N. J., and Pritchard, M.: Climate-invariant machine learning, Sci. Adv., 10, eadj7250, https://doi.org/10.1126/sciadv.adj7250, 2024.

Bi, K., Xie, L., Zhang, H., Chen, X., Gu, X., and Tian, Q.: Accurate medium-range global weather forecasting with 3D neural networks, Nature, 619, 533–538, https://doi.org/10.1038/s41586-023-06185-3, 2023.

Bishop, C. H. and Abramowitz, G.: Climate model dependence and the replicate Earth paradigm, Clim. Dynam., 41, 885–900, https://doi.org/10.1007/s00382-012-1610-y, 2013.

Bishop, C. M.: Pattern recognition and machine learning, Springer Science+Business Media, ISBN 978-0387-31073-2, 2006.

Bouabid, S., Sejdinovic, D., and Watson-Parris, D.: FaIRGP: A Bayesian Energy Balance Model for Surface Temperatures Emulation, arXiv, 1–64 pp., http://arxiv.org/abs/2307.10052 (last access: 10 March 2024), 2023.

Bouallègue, Z. B., Weyn, J. A., Clare, M. C. A., Dramsch, J., Dueben, P., and Chantry, M.: Improving Medium-Range Ensemble Weather Forecasts with Hierarchical Ensemble Transformers, Art. Intell. Earth Syst., 3, e230027, https://doi.org/10.1175/aies-d-23-0027.1, 2024.

Bracegirdle, T. J. and Stephenson, D. B.: On the robustness of emergent constraints used in multimodel climate change projections of arctic warming, J. Climate, 26, 669–678, https://doi.org/10.1175/JCLI-D-12-00537.1, 2013.

Bretherton, C. S. and Caldwell, P. M.: Combining emergent constraints for climate sensitivity, J. Climate, 33, 7413–7430, https://doi.org/10.1175/JCLI-D-19-0911.1, 2020.

Breul, P., Ceppi, P., and Shepherd, T. G.: Revisiting the wintertime emergent constraint of the southern hemispheric midlatitude jet response to global warming, Weather Clim. Dynam., 4, 39–47, https://doi.org/10.5194/wcd-4-39-2023, 2023.

Brient, F. and Schneider, T.: Constraints on climate sensitivity from space-based measurements of low-cloud reflection, J. Climate, 29, 5821–5835, https://doi.org/10.1175/JCLI-D-15-0897.1, 2016.

Brunner, L., McSweeney, C., Ballinger, A. P., Befort, D. J., Benassi, M., Booth, B., Coppola, E., de Vries, H., Harris, G., Hegerl, G. C., Knutti, R., Lenderink, G., Lowe, J., Nogherotto, R., O'Reilly, C., Qasmi, S., Ribes, A., Stocchi, P., and Undorf, S.: Comparing Methods to Constrain Future European Climate Projections Using a Consistent Framework, J. Climate, 33, 8671–8692, https://doi.org/10.1175/JCLI-D-19-0953.1, 2020a.

Brunner, L., Pendergrass, A. G., Lehner, F., Merrifield, A. L., Lorenz, R., and Knutti, R.: Reduced global warming from CMIP6 projections when weighting models by performance and independence, Earth Syst. Dynam., 11, 995–1012, https://doi.org/10.5194/esd-11-995-2020, 2020b.

Caldwell, P. M., Bretherton, C. S., Zelinka, M. D., Klein, S. A., Santer, B. D., and Sanderson, B. M.: Statistical significance of climate sensitivity predictors obtained by data mining, Geophys. Res. Lett., 41, 1803–1808, https://doi.org/10.1002/2014gl059205, 2014.

Caldwell, P. M., Zelinka, M. D., and Klein, S. A.: Evaluating Emergent Constraints on Equilibrium Climate Sensitivity, J. Climate, 31, 3921–3942, https://doi.org/10.1175/JCLI-D-17-0631.1, 2018.

Camps-Valls, G., Gerhardus, A., Ninad, U., Varando, G., Martius, G., Balaguer-Ballester, E., Vinuesa, R., Diaz, E., Zanna, L., and Runge, J.: Discovering causal relations and equations from data, Phys. Rep., 1044, 1–68, https://doi.org/10.1016/j.physrep.2023.10.005, 2023.

Carslaw, K. S., Lee, L. A., Reddington, C. L., Mann, G. W., and Pringle, K. J.: The magnitude and sources of uncertainty in global aerosol, Faraday Discussions, 165, 495, https://doi.org/10.1039/c3fd00043e, 2013a.

Carslaw, K. S., Lee, L. A., Reddington, C. L., Pringle, K. J., Rap, A., Forster, P. M., Mann, G. W., Spracklen, D. V., Woodhouse, M. T., Regayre, L. A., and Pierce, J. R.: Large contribution of natural aerosols to uncertainty in indirect forcing, Nature, 503, 67–71, https://doi.org/10.1038/nature12674, 2013b.

Ceppi, P. and Nowack, P.: Observational evidence that cloud feedback amplifies global warming, P. Natl. Acad. Sci. USA, 118, e2026290118, https://doi.org/10.1073/pnas.2026290118, 2021.

Ceppi, P., Brient, F., Zelinka, M., and Hartmann, D. L.: Cloud feedback mechanisms and their representation in global climate models, Wiley Interdisciplinary Reviews: Climate Change, 8, 1–21, https://doi.org/10.1002/wcc.465, 2017.

Ceppi, P., Myers, T. A., Nowack, P., Wall, C. J., and Zelinka, M. D.: Implications of a Pervasive Climate Model Bias for Low-Cloud Feedback, Geophys. Res. Lett., 51, e2024GL110525, https://doi.org/10.1029/2024GL110525, 2024.

Cesana, G. V. and Del Genio, A. D.: Observational constraint on cloud feedbacks suggests moderate climate sensitivity, Nat. Clim. Change, 11, 213–220, https://doi.org/10.1038/s41558-020-00970-y, 2021.

Chadburn, S. E., Burke, E. J., Cox, P. M., Friedlingstein, P., Hugelius, G., and Westermann, S.: An observation-based constraint on permafrost loss as a function of global warming, Nat. Clim. Change, 7, 340–344, https://doi.org/10.1038/nclimate3262, 2017.

Charlesworth, E., Ploeger, F., Birner, T., Baikhadzhaev, R., Abalos, M., Abraham, N. L., Akiyoshi, H., Bekki, S., Dennison, F., Jöckel, P., Keeble, J., Kinnison, D., Morgenstern, O., Plummer, D., Rozanov, E., Strode, S., Zeng, G., Egorova, T., and Riese, M.: Stratospheric water vapor affecting atmospheric circulation, Nat. Commun., 14, 3925, https://doi.org/10.1038/s41467-023-39559-2, 2023.

Chen, Z., Liu, Y., and Sun, H.: Physics-informed learning of governing equations from scarce data, Nat. Commun., 12, 6136, https://doi.org/10.1038/s41467-021-26434-1, 2021.

Chen, Z., Zhou, T., Chen, X., Zhang, W., Zhang, L., Wu, M., and Zou, L.: Observationally constrained projection of Afro-Asian monsoon precipitation, Nat. Commun., 13, 2552, https://doi.org/10.1038/s41467-022-30106-z, 2022.

Cox, P. M.: Emergent Constraints on Climate-Carbon Cycle Feedbacks, Curr. Clim. Change Rep., 5, 275–281, https://doi.org/10.1007/s40641-019-00141-y, 2019.

Cox, P. M., Pearson, D., Booth, B. B., Friedlingstein, P., Huntingford, C., Jones, C. D., and Luke, C. M.: Sensitivity of tropical carbon to climate change constrained by carbon dioxide variability, Nature, 494, 341–344, https://doi.org/10.1038/nature11882, 2013.

Cox, P. M., Huntingford, C., and Williamson, M. S.: Emergent constraint on equilibrium climate sensitivity from global temperature variability, Nature, 553, 319–322, https://doi.org/10.1038/nature25450, 2018.

DeAngelis, A. M., Qu, X., Zelinka, M. D., and Hall, A.: An observational radiative constraint on hydrologic cycle intensification, Nature, 528, 249–253, https://doi.org/10.1038/nature15770, 2015.

Deser, C., Phillips, A., Bourdette, V., and Teng, H.: Uncertainty in climate change projections: The role of internal variability, Clim. Dynam., 38, 527–546, https://doi.org/10.1007/s00382-010-0977-x, 2012.

Dessler, A., Ye, H., Wang, T., Schoeberl, M., Oman, L., Douglass, A., Butler, A., Rosenlof, K., Davis, S., and Portmann, R.: Transport of ice into the stratosphere and the humidification of the stratosphere over the 21st century, Geophys. Res. Lett., 43, 2323–2329, https://doi.org/10.1002/2016GL067991, 2016.